Zephyr-7B Advances from Mistral-7B

Zephyr is a new series of language models trained to act as helpful assistants. The first model, Zephyr-7B-α, is a fine-tuned version of Mistral-7B-v0.1. It was trained with Direct Preference Optimization (DPO) on a mix of publicly available and synthetic datasets.

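The researchers found that removing the built-in alignment of these datasets improved performance on MT-Bench and made the model more helpful. The trade-off is that the model is more likely to generate problematic text when prompted to do so. For now, Zephyr-7B-α should be used cautiously, for research and educational purposes only.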

Technical Advances in Zephyr-7B

Zephyr-7B is an incremental evolution of Mistral-7B rather than a new architecture. It is a fine-tune of Mistral-7B's weights, so it inherits Mistral-7B's efficiency techniques unchanged:

- Grouped-query attention shares each key/value head across several query heads, reducing memory use and speeding up inference.

- Sliding window attention, a form of sparse attention, limits each token to a fixed window of recent tokens, making long inputs cheaper to process.

Zephyr-7B pairs these inherited optimizations with the fine-tuning recipe described below, which is what delivers its gains in accuracy.
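Mistral-7B's attention, which Zephyr-7B inherits, combines two ideas. Sliding window attention applies a causal mask that lets each token see only a fixed number of recent tokens. Grouped-query attention lets several query heads share one key/value head. The following is a minimal NumPy sketch of both using toy sizes. The Mistral 7B paper reports a 4,096-token window and 32 query heads sharing 8 key/value heads. The function names here are hypothetical, and the code illustrates the techniques rather than Mistral's optimized implementation.

```python
import numpy as np


def sliding_window_mask(seq_len: int, window: int) -> np.ndarray:
    """mask[i, j] is True when query position i may attend to key position j.

    Causal (j <= i) and local: only the `window` most recent positions,
    including i itself, are visible.
    """
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return (j <= i) & (i - j < window)


def grouped_query_attention(q, k, v, mask):
    """Scaled dot-product attention where KV heads are shared by query groups.

    q: (n_q_heads, seq, d); k, v: (n_kv_heads, seq, d); mask: (seq, seq).
    """
    n_q_heads, _, d = q.shape
    group_size = n_q_heads // k.shape[0]
    # Duplicate each KV head so query heads [g*group_size, (g+1)*group_size)
    # all read from KV head g.
    k = np.repeat(k, group_size, axis=0)
    v = np.repeat(v, group_size, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)
    scores = np.where(mask, scores, -np.inf)  # block positions outside the window
    scores -= scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


# Toy sizes: 6 tokens, window of 3, 8 query heads sharing 2 KV heads.
mask = sliding_window_mask(6, window=3)
print(mask.astype(int))

rng = np.random.default_rng(0)
q = rng.standard_normal((8, 6, 16))
k = rng.standard_normal((2, 6, 16))
v = rng.standard_normal((2, 6, 16))
out = grouped_query_attention(q, k, v, mask)
print(out.shape)  # (8, 6, 16)
```

With a window of 3, token 3 attends to tokens 1, 2 and 3 only, so its mask row is `[0 1 1 1 0 0]`. The KV cache only needs to hold 2 heads instead of 8, which is where grouped-query attention saves memory.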

Using Zephyr-7B

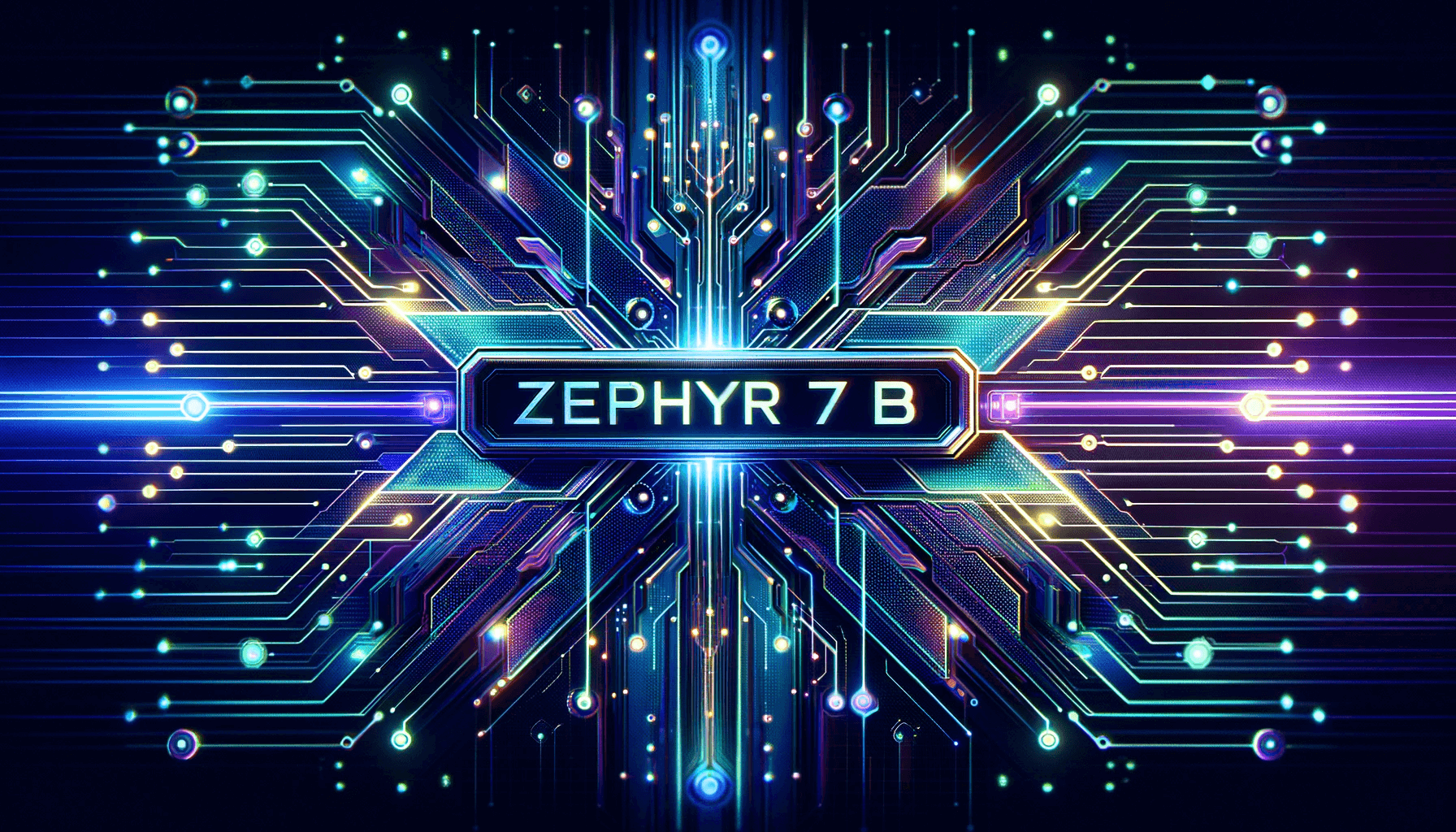

This is what it looks like in LM Studio, running locally on my machine.

You can also access Zephyr-7B through Hugging Face's transformers library. All you need to do is call the pipeline() function.

```python
# Install transformers from source - only needed for versions <= v4.34
# pip install git+https://github.com/huggingface/transformers.git
# pip install accelerate

import torch
from transformers import pipeline

pipe = pipeline("text-generation", model="HuggingFaceH4/zephyr-7b-alpha", torch_dtype=torch.bfloat16, device_map="auto")

# We use the tokenizer's chat template to format each message - see https://huggingface.co/docs/transformers/main/en/chat_templating
messages = [
    {
        "role": "system",
        "content": "You are a friendly chatbot who always responds in the style of a pirate.",
    },
    {"role": "user", "content": "How many helicopters can a human eat in one sitting?"},
]
prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
outputs = pipe(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)
print(outputs[0]["generated_text"])

# Example output (sampling is enabled, so the assistant's reply will vary):
# <|system|>
# You are a friendly chatbot who always responds in the style of a pirate.</s>
# <|user|>
# How many helicopters can a human eat in one sitting?</s>
# <|assistant|>
# Ah, me hearty matey! But yer question be a puzzler! A human cannot eat a helicopter in one sitting, as helicopters are not edible. They be made of metal, plastic, and other materials, not food!
```
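To see what `apply_chat_template` produces without downloading the model, here is a hand-rolled approximation of the layout visible in the printed output above. Each turn is a `<|role|>` tag, the content, and the `</s>` end-of-sequence token. `format_zephyr_prompt` is a hypothetical helper, and its exact whitespace is an assumption. The tokenizer's own template remains authoritative, so inspect `pipe.tokenizer.chat_template` or keep using `apply_chat_template` in real code.

```python
def format_zephyr_prompt(messages, add_generation_prompt=True, eos_token="</s>"):
    """Hypothetical helper approximating the Zephyr chat layout.

    Each turn becomes "<|role|>\\n<content></s>\\n"; an optional trailing
    "<|assistant|>\\n" cues the model to write the next reply.
    """
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ("system", "user", "assistant"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<|{role}|>\n{message['content']}{eos_token}\n")
    if add_generation_prompt:
        parts.append("<|assistant|>\n")
    return "".join(parts)


prompt = format_zephyr_prompt([
    {"role": "system", "content": "You are a friendly chatbot."},
    {"role": "user", "content": "Hello!"},
])
print(prompt)
```

The trailing `<|assistant|>` tag is what `add_generation_prompt=True` adds. It tells the model that the next tokens it writes belong to the assistant's turn.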

Optimizing for Human Alignment

A key challenge is aligning large language models’ responses with human preferences. Without proper tuning, even capable models can give answers that are unhelpful, evasive, or off-target.

The team behind Zephyr-7B tackled this with preference-based fine-tuning and rigorous benchmarking. They used MT-Bench, a multi-turn benchmark in which GPT-4 acts as the judge, to measure how well Zephyr-7B’s outputs match human preferences.
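MT-Bench-style evaluation uses a strong LLM as a judge that grades each answer on a 1 to 10 scale. The benchmark score is the average of those grades. The following is a minimal sketch of turning judge replies into a single score. It assumes the judge writes its verdict in a `[[rating]]` format, as we understand FastChat's judge prompts request. `parse_rating`, `average_score` and the sample replies are hypothetical, and the real evaluation code lives in FastChat's `llm_judge` tooling.

```python
import re
from statistics import mean
from typing import Iterable, Optional

# Assumption: the judge writes its verdict as "[[rating]]", e.g. "Rating: [[7]]".
RATING_PATTERN = re.compile(r"\[\[(\d+(?:\.\d+)?)\]\]")


def parse_rating(judge_output: str) -> Optional[float]:
    """Return the first [[n]] rating in a judge reply, or None if absent."""
    match = RATING_PATTERN.search(judge_output)
    return float(match.group(1)) if match else None


def average_score(judge_outputs: Iterable[str]) -> float:
    """Average all parseable ratings; replies without a rating are skipped."""
    ratings = [r for r in map(parse_rating, judge_outputs) if r is not None]
    if not ratings:
        raise ValueError("no parseable ratings")
    return mean(ratings)


replies = [
    "The answer is accurate and stays in character. Rating: [[8]]",
    "Helpful but misses part of the question. Rating: [[6]]",
    "I cannot rate this response.",
]
print(average_score(replies))  # 7.0
```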

Cutting-Edge Development Process

Creating Zephyr-7B required an extensive development process including:

- Large-scale pretraining, inherited from Mistral-7B, to build foundational skills

- Distilled supervised fine-tuning on UltraChat, a dataset of dialogues generated by a larger teacher model

- Preference modeling from AI feedback: GPT-4 scores responses from several models (the UltraFeedback dataset), standing in for human annotators

- Direct Preference Optimization to align outputs with those preferences

This combination of scale and preference-based guidance produced Zephyr-7B’s refined performance. Learn more in this great resource.
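AI feedback becomes training data by turning scored completions into preference pairs. The Zephyr paper describes picking the highest-scored response as "chosen" and a random lower-scored one as "rejected". The following is a minimal sketch of that rule. `build_preference_pair` and the scores are hypothetical. The `prompt`/`chosen`/`rejected` field names follow the convention used by TRL's DPO tooling.

```python
import random


def build_preference_pair(prompt, completions, scores, rng):
    """Turn AI-scored completions into one DPO-style preference example.

    chosen   = the highest-scored completion
    rejected = a randomly picked completion that scored strictly lower
    """
    if len(completions) != len(scores) or len(completions) < 2:
        raise ValueError("need at least two completions, each with a score")
    best = max(range(len(scores)), key=lambda i: scores[i])
    lower = [i for i in range(len(scores)) if scores[i] < scores[best]]
    if not lower:
        raise ValueError("all completions tied; no rejected candidate")
    return {
        "prompt": prompt,
        "chosen": completions[best],
        "rejected": completions[rng.choice(lower)],
    }


pair = build_preference_pair(
    "Explain DPO in one sentence.",
    ["answer A", "answer B", "answer C", "answer D"],
    [3.0, 9.5, 6.0, 1.0],  # made-up judge scores, for illustration only
    random.Random(0),
)
print(pair["chosen"])  # answer B
```

Picking a random rejected answer rather than always the worst one keeps the pairs from being trivially easy to tell apart.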

Innovative Fine-Tuning

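Zephyr-7B is aligned with a recent fine-tuning technique:

- Distilled Direct Preference Optimization (dDPO), which learns directly from preference judgments, here produced by GPT-4 rather than human annotators

This lets the model learn preferred behavior without training a separate reward model or running a reinforcement learning loop.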
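DPO treats each preference pair as a classification problem. It raises the policy's log-probability of the chosen answer, relative to a frozen reference model, above that of the rejected answer. The loss is −log σ(β · [(log π(y_w) − log π_ref(y_w)) − (log π(y_l) − log π_ref(y_l))]). The following is a minimal single-pair sketch on summed sequence log-probabilities. The function names and the value β = 0.1 are illustrative assumptions, not Zephyr's exact training configuration.

```python
import math


def softplus(x: float) -> float:
    """log(1 + exp(x)), computed without overflow for large |x|."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given summed sequence log-probs.

    loss = -log sigmoid(beta * [(pi_w - ref_w) - (pi_l - ref_l)])
    """
    # How far the policy has moved from the reference on each answer.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    return softplus(-margin)  # -log(sigmoid(m)) == softplus(-m)


# Policy identical to the reference: margin 0, loss = log 2.
print(round(dpo_loss(-12.0, -15.0, -12.0, -15.0), 4))  # 0.6931
# Policy favors the chosen answer more than the reference does: lower loss.
print(dpo_loss(-10.0, -18.0, -12.0, -15.0))
```

Because only log-probability differences appear, the reference model acts as an anchor that keeps the fine-tuned policy from drifting too far from Mistral-7B's original behavior.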

Extensively Evaluated Performance

Extensive benchmarking quantified Zephyr-7B’s proficiencies in:

- Multi-turn dialog that keeps track of context across turns

- Fluent, grammatical text generation

- Strong results on chat benchmarks such as MT-Bench and AlpacaEval

This testing confirmed Zephyr-7B’s versatility as a chat assistant, although the model is still recommended for research and educational use only.

Outlook on Ethics and Alignment

While Zephyr-7B demonstrates technological advancements, work remains regarding ethical alignment. As the model evolves, development should continue focusing on aligning its capabilities with human values. Responsible application in research and education is crucial.

In summary, Zephyr-7B marks notable progress in language processing over Mistral-7B. But realizing the full potential of AI like Zephyr-7B requires an equally rigorous focus on beneficent outcomes guided by human wisdom.